Latest from Queryloop

Stay updated with our latest research findings, product developments, and insights into AI optimization

Stay updated with our latest research findings, product developments, and insights into AI optimization

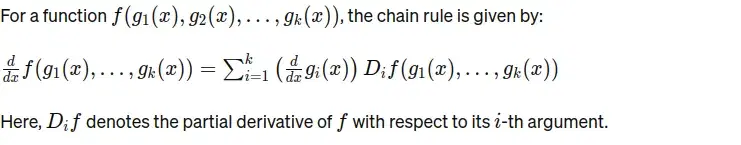

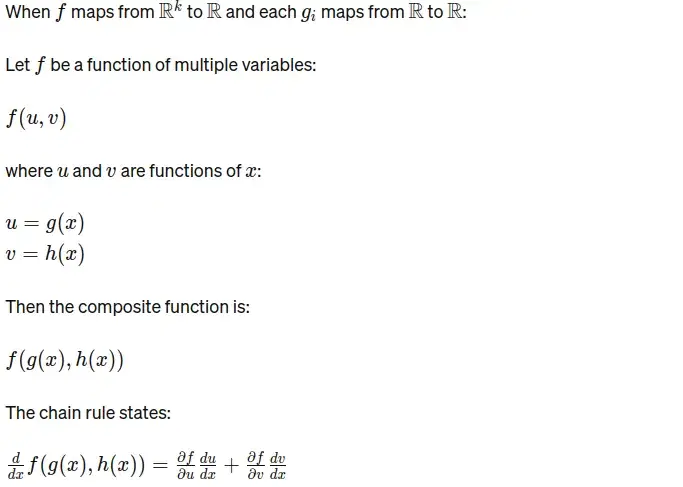

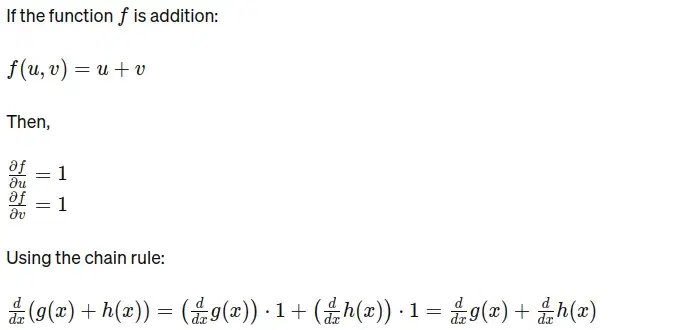

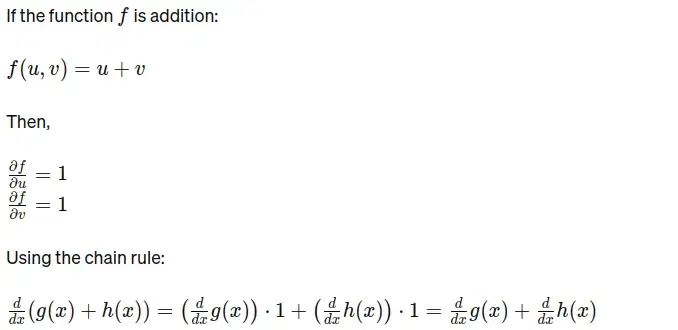

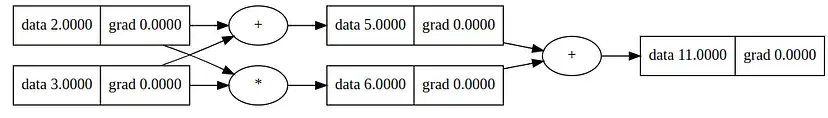

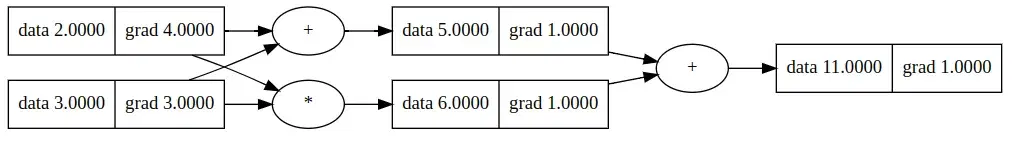

A comprehensive explanation of Andrej Karpathy's Micrograd implementation with mathematical concepts and object-oriented programming.

Neural Networks: Zero to Hero by Andrej Karpathy focuses on building neural networks from scratch, starting with the basics of backpropagation and advancing to modern deep neural networks like GPT.
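The core idea behind a Micrograd-style engine can be sketched in a few dozen lines. Each scalar is wrapped in an object that remembers which operation produced it and how to push gradients back through that operation. The sketch below is illustrative only and is not the code from the post or from Karpathy's repository. It assumes a `Value` class with `data`, `grad` and `backward()`, loosely following Micrograd's naming, supports only `+`, `*` and `tanh`, and omits everything else.

```python
import math


class Value:
    """A scalar that records the operations producing it (illustrative sketch)."""

    def __init__(self, data, _children=(), _op=""):
        self.data = data
        self.grad = 0.0                # d(output)/d(self), filled in by backward()
        self._backward = lambda: None  # set by the operation that created this node
        self._prev = set(_children)    # parent nodes in the computation graph
        self._op = _op                 # operation label, useful for debugging

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data + other.data, (self, other), "+")

        def _backward():
            # Local derivative of a + b is 1 for both inputs; chain rule scales by out.grad.
            self.grad += out.grad
            other.grad += out.grad

        out._backward = _backward
        return out

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data * other.data, (self, other), "*")

        def _backward():
            # d(a*b)/da = b and d(a*b)/db = a.
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad

        out._backward = _backward
        return out

    def tanh(self):
        t = math.tanh(self.data)
        out = Value(t, (self,), "tanh")

        def _backward():
            # d tanh(x)/dx = 1 - tanh(x)^2
            self.grad += (1 - t ** 2) * out.grad

        out._backward = _backward
        return out

    def backward(self):
        # Topologically sort the graph so every node receives its full gradient
        # before it propagates that gradient to its own inputs.
        topo, visited = [], set()

        def build(v):
            if v not in visited:
                visited.add(v)
                for child in v._prev:
                    build(child)
                topo.append(v)

        build(self)
        self.grad = 1.0
        for v in reversed(topo):
            v._backward()


# Usage: c = a*b + a, so dc/da = b + 1 and dc/db = a.
a = Value(2.0)
b = Value(-3.0)
c = a * b + a
c.backward()
print(c.data, a.grad, b.grad)  # -4.0 -2.0 2.0
```

Gradients are accumulated with `+=` rather than assigned. This matters when a value is used more than once: here `a` feeds both the product and the sum, and its two contributions must be summed.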