Latest from Queryloop

Stay updated with our latest research findings, product developments, and insights into AI optimization

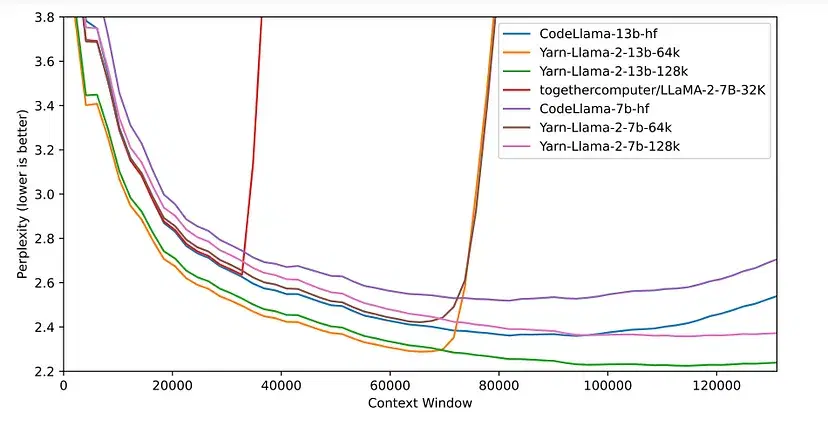

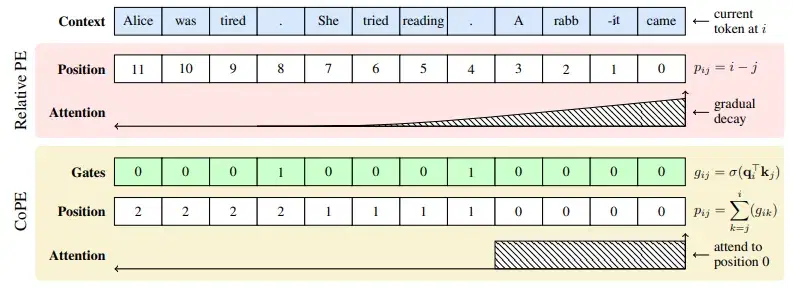

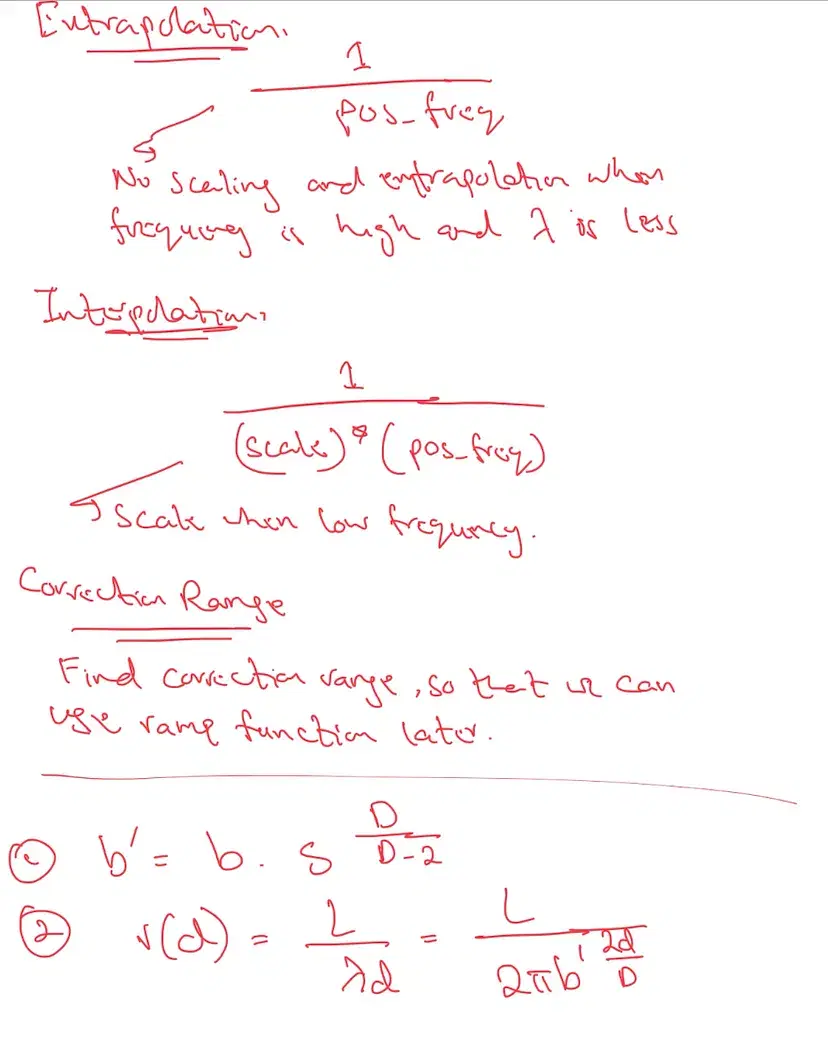

Comparison of various positional embeddings.

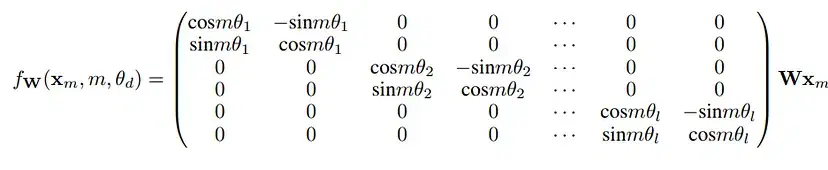

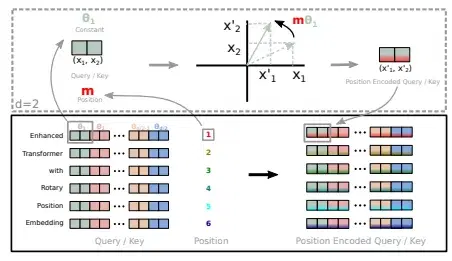

When processing sequences such as text, token order is critical. To keep a model from treating a sequence as an unordered set, position information has to be encoded explicitly. Positional encoding does this by assigning an embedding vector to each position and adding it to the corresponding token representation. Many positional encoding techniques have been introduced: Absolute-PE counts tokens from the start of a sequence, while Relative-PE counts backward starting from the current token. We will discuss some of the more advanced position encoding methods: RoPE and its variants (Linear, NTK, YaRN), and CoPE.
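To make the two counting schemes and the "add a position vector to each token" step concrete, here is a minimal sketch in plain Python. The function names, the toy vocabulary, and the randomly initialised tables are all assumptions made for illustration; real models learn these vectors or compute them from a fixed formula.

```python
import random

def absolute_positions(seq_len):
    """Absolute-PE index: count tokens from the start of the sequence."""
    return list(range(seq_len))

def relative_positions(seq_len):
    """Relative-PE index: for each current token i, count backward
    to every token j at or before it (distance i - j)."""
    return [[i - j for j in range(i + 1)] for i in range(seq_len)]

def add_position_embeddings(token_vectors, position_table):
    """Add the vector for position p to the token vector sitting at position p."""
    return [
        [t + p for t, p in zip(tok, position_table[pos])]
        for pos, tok in enumerate(token_vectors)
    ]

# Hypothetical toy setup: 4-dimensional embeddings with random values.
rng = random.Random(0)
dim = 4
tokens = ["the", "cat", "sat"]
token_table = {w: [rng.uniform(-1, 1) for _ in range(dim)] for w in tokens}
position_table = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in tokens]

token_vectors = [token_table[w] for w in tokens]
encoded = add_position_embeddings(token_vectors, position_table)

print(absolute_positions(3))  # [0, 1, 2]
print(relative_positions(3))  # [[0], [1, 0], [2, 1, 0]]
```

Because the position vector differs per slot, the same word placed at two different positions ends up with two different input representations, which is what lets the model distinguish order.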